Concept for Using Persisted Anchor in Multiplayer MR Games

Introduction

In multiplayer Mixed Reality (MR) interactive games, players aim to interact with each other and virtual elements in a shared environment, blending the real and virtual worlds. Ensuring that virtual elements align accurately with the real surroundings is crucial. This document discusses the challenges faced in designing multiplayer MR games and how to ensure everything aligns properly to enhance player experience.

Challenges in Multiplayer MR Games

In these games, each player’s MR device builds its own understanding of its surroundings. Each player starts from a spawn point (“birth point”), which is a specific starting pose in the virtual world. Problems arise when players whose devices face different real-world directions are shown the same virtual content from the same viewpoint. For instance, if one player faces north in real life and another faces south, both would see the same virtual scene in front of them, which contradicts their real positions and breaks the game’s realism.

When a virtual object is one meter in front of the player facing north, the player facing south should perceive the object as being behind them. To align directions, we rotate the south-facing player’s spawn point so that it faces the opposite direction in the virtual world. Both players then agree on direction.

When the players are two meters apart in reality, the south-facing player’s spawn point is also moved to two meters in front of the north-facing player. This preserves the players’ real-world relative positions in the virtual world.

To make these adjustments to the spawn points, we need to know the players’ relative poses in reality, which can be obtained through a Persisted Anchor.
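
The two adjustments above (direction, then position) can be checked with a small 2D calculation. The following is only an illustrative sketch: the function name `world_to_local` and the conventions (+y is north, yaw 0 faces north, the local +y axis is "forward") are assumptions made for this example, not part of any SDK.

```python
import math

def world_to_local(point, player_pos, player_yaw_deg):
    """Express a world-space 2D point in a player's local frame.

    Assumed convention for this sketch: +y is north, yaw 0 faces north,
    yaw increases counterclockwise, and local +y is the player's forward.
    """
    dx = point[0] - player_pos[0]
    dy = point[1] - player_pos[1]
    yaw = math.radians(player_yaw_deg)
    # Rotate the offset by -yaw to undo the player's heading.
    local_x = math.cos(yaw) * dx + math.sin(yaw) * dy
    local_y = -math.sin(yaw) * dx + math.cos(yaw) * dy
    return (round(local_x, 6), round(local_y, 6))

# A virtual object one meter north of the world origin.
virtual_object = (0.0, 1.0)

# North-facing player at the origin: the object is 1 m in front (local y = +1).
north_view = world_to_local(virtual_object, (0.0, 0.0), 0.0)

# South-facing spawn point at the same spot: the object is 1 m behind (local y = -1).
south_view_rotated = world_to_local(virtual_object, (0.0, 0.0), 180.0)

# South-facing spawn point moved 2 m in front of the north-facing player:
# the object now sits between the players, 1 m in front of each.
south_view_moved = world_to_local(virtual_object, (0.0, 2.0), 180.0)
```

With only the rotation applied, the south-facing player sees the object behind them. Once the spawn point is also moved two meters north, the object lies between the two players, as it would in reality.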

How Persisted Anchor Solves the Problem

Because each MR device maps its environment independently, the maps are not shared between devices, so the devices cannot calculate their poses relative to each other on their own.

A Persisted Anchor is created from feature points in the real environment. When a user creates a Persisted Anchor, environmental information related to the anchor is stored in the anchor’s data. When the host user exports this Persisted Anchor data and other users import it, their devices can locate the anchor’s real-world position in their own maps.

Although each device tracks the Persisted Anchor at different coordinates in its own map, the anchor refers to the same place in reality. Once both user 1 and user 2 have located this anchor, they share a common reference point, which in effect means they have located each other.

By utilizing this relationship, we can align the virtual world accordingly.
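
As a rough illustration of why a shared anchor is enough, the sketch below represents 2D poses as `(x, y, yaw)` tuples and derives where device 2's map origin lies in device 1's map from the two tracked anchor poses. The helper names `compose` and `inverse` and all numeric poses are made up for this example.

```python
import math

def compose(a, b):
    """Return pose b (given in a's frame) expressed in a's parent frame."""
    ax, ay, ayaw = a
    bx, by, byaw = b
    c, s = math.cos(ayaw), math.sin(ayaw)
    return (ax + c * bx - s * by, ay + s * bx + c * by, ayaw + byaw)

def inverse(p):
    """Invert a rigid 2D pose: rotation becomes -yaw, translation becomes -R^T * t."""
    x, y, yaw = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (-(c * x + s * y), -(-s * x + c * y), -yaw)

# The same physical anchor, tracked at different coordinates by each device
# (hypothetical values).
anchor_in_map1 = (1.0, 2.0, math.pi / 2)
anchor_in_map2 = (-1.0, 0.5, 0.0)

# Pose of device 2's map origin expressed in device 1's map.
map2_in_map1 = compose(anchor_in_map1, inverse(anchor_in_map2))

# Sanity check: mapping device 2's anchor pose into map 1 reproduces
# device 1's anchor pose.
roundtrip = compose(map2_in_map1, anchor_in_map2)
```

Neither device needs the other's map. The two anchor poses alone determine the relationship between the two coordinate systems.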

Illustrating World Alignment with Graphics

The following illustrations depict the concepts discussed in the previous sections to make world alignment in multiplayer MR content easier to understand.

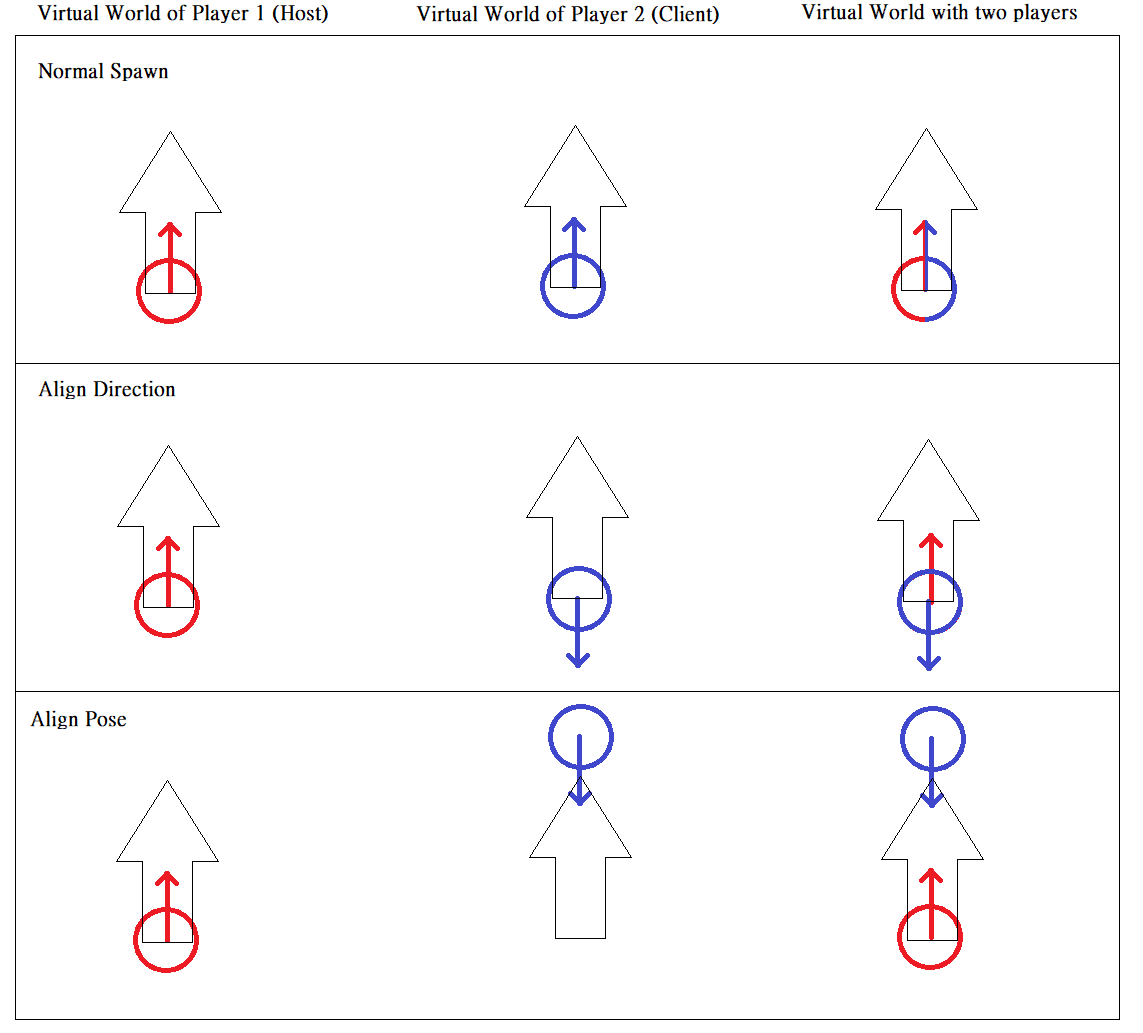

This represents a player’s spawn point:

And this represents the virtual world:

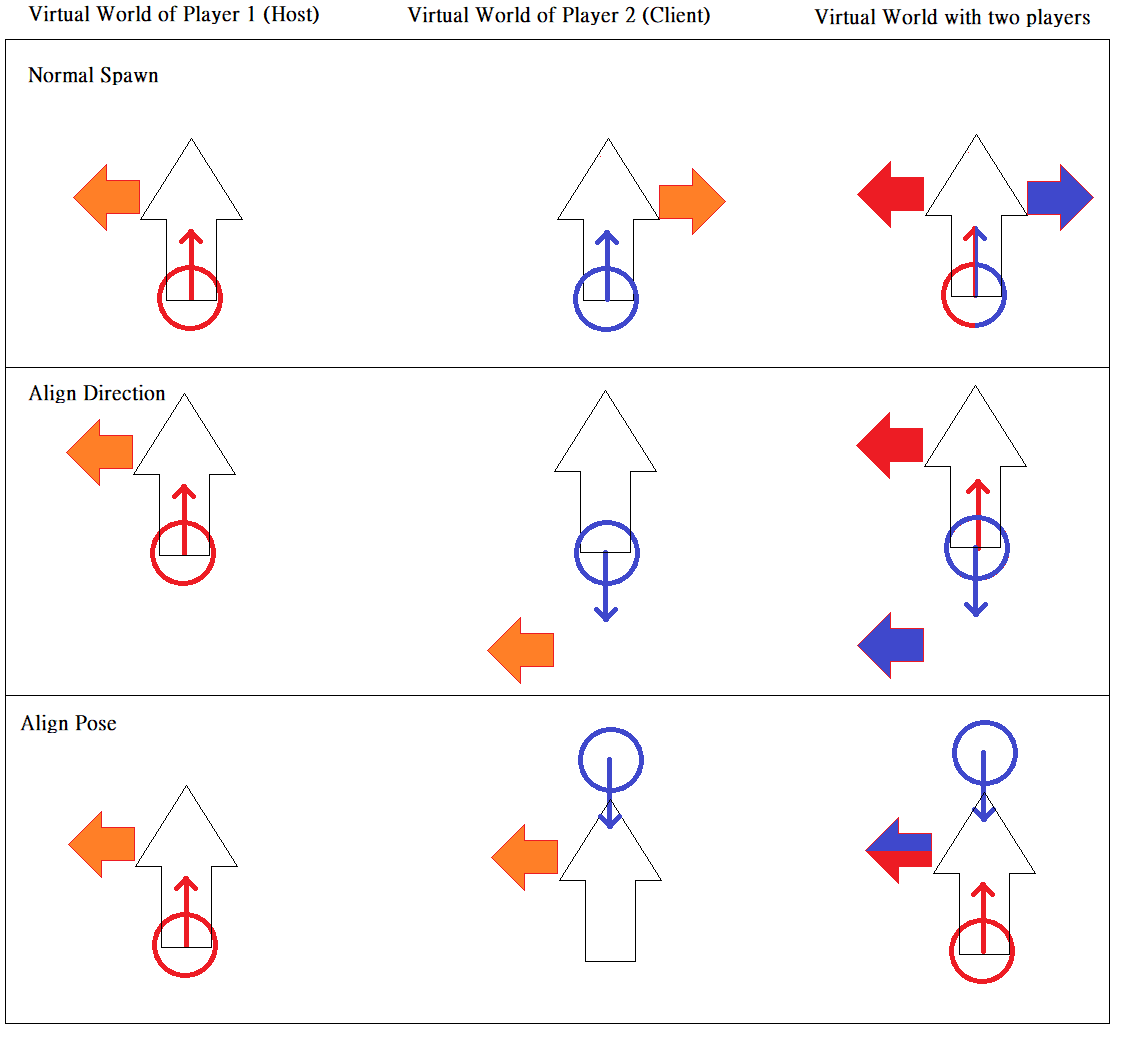

In reality, the two players stand face to face. Red stands for the host player, and blue stands for the client player.

Both players spawn in the same virtual world. If they do not align the world, they will both see the scene from the same direction. For example, both will see the arrow pointing forward from their own point of view.

If they align their directions (that is, their poses), the result matches reality.

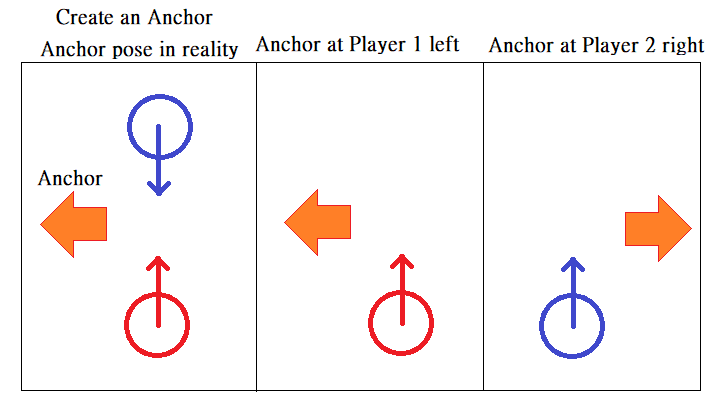

We will use a Persisted Anchor to do world alignment. First, the host player creates a Persisted Anchor to their front left, pointing to their left. Because the players face each other, this anchor is to the client player’s front right and points to the client’s right. Second, the host sends the exported Persisted Anchor data to the client player. After the client imports the anchor and starts tracking it, the anchor should appear to the client player’s front right.

Now we apply this relationship to the world alignment process. If we make both players’ anchor poses coincide in the virtual world, the world alignment is complete.

The illustration here simplifies many things. For example, in practice the client’s tracking origin will not be exactly in front of the host’s tracking origin.

How to calculate it

Assume all players are in the same world space. The “world” in the pseudocode below can be the world center (position (0, 0, 0)) or a world reference point.

The host player needs to send the exported Persisted Anchor data and the anchor’s world pose to the other players. The other players receive the Persisted Anchor data, import it into the system, and then use the anchor system to get the anchor’s tracking pose.
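
The two pieces of data the host sends could be bundled into a single message. The sketch below is one hypothetical way to do that: the class `AnchorShareMessage`, its field names, and the JSON/base64 encoding are all assumptions for illustration and are not defined by the anchor system. The exported anchor data is treated as opaque bytes.

```python
import base64
import json
from dataclasses import dataclass, asdict

@dataclass
class AnchorShareMessage:
    """Hypothetical payload sent from the host to the other players."""
    anchor_name: str
    exported_anchor_b64: str  # opaque exported anchor bytes, base64-encoded
    anchor_position: list     # anchor position in world space: [x, y, z]
    anchor_rotation: list     # anchor rotation in world space: quaternion [x, y, z, w]

def encode_message(name, exported_bytes, position, rotation):
    """Host side: pack the exported anchor data and its world pose as JSON text."""
    message = AnchorShareMessage(
        anchor_name=name,
        exported_anchor_b64=base64.b64encode(exported_bytes).decode("ascii"),
        anchor_position=list(position),
        anchor_rotation=list(rotation),
    )
    return json.dumps(asdict(message))

def decode_message(text):
    """Client side: recover the exported bytes (to import) and the world pose."""
    data = json.loads(text)
    exported_bytes = base64.b64decode(data["exported_anchor_b64"])
    return (data["anchor_name"], exported_bytes,
            data["anchor_position"], data["anchor_rotation"])

# Example round trip with placeholder bytes standing in for real exported data.
wire_text = encode_message("shared_anchor", b"\x00\x01placeholder",
                           (1.0, 0.0, 2.0), (0.0, 0.0, 0.0, 1.0))
name, exported, position, rotation = decode_message(wire_text)
```

Sending the world pose together with the exported data lets a client run the alignment as soon as its own device starts tracking the imported anchor.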

Pseudocode here:

    //              * Player2
    //             /| CameraRig
    //            / |
    //   Anchor  /  |
    // Tracking /   | World
    //    pose *----* Center
    Matrix AlignWorld(Matrix player1AnchorToWorld, Matrix player2AnchorToCameraRig)
    {
        // Invert the client's tracked anchor pose to get CameraRig -> Anchor.
        Matrix player2CameraRigToAnchor = player2AnchorToCameraRig.Inverse();
        // Chain CameraRig -> Anchor -> World.
        Matrix player2CameraRigToWorld = player1AnchorToWorld * player2CameraRigToAnchor;
        return player2CameraRigToWorld;
    }

    // In client player's device
    void DoAlignWorld() {
        // Get player1AnchorToWorld data sent from host player
        Matrix player1AnchorToWorld = ...;

        // Import a Persisted Anchor and get its tracking pose
        Matrix player2AnchorToCameraRig = Matrix.TRS(anchorPose.position, anchorPose.rotation, Vector.one);
        Matrix player2CameraRigToWorld = AlignWorld(player1AnchorToWorld, player2AnchorToCameraRig);

        // Set client player's pose in world space.
        player2.transformMatrix = player2CameraRigToWorld;
    }
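
To check the matrix chain in the pseudocode above with concrete numbers, here is a minimal Python sketch that uses plain 4x4 lists. The helpers `trs`, `matmul`, and `rigid_inverse` are written for this example only. It assumes rotation about the vertical Y axis and unit scale (the pseudocode also passes a scale of one), and every pose value is made up.

```python
import math

def trs(position, yaw_deg):
    """Build a 4x4 pose matrix: rotation about the Y (up) axis plus translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    x, y, z = position
    return [[c, 0.0, s, x],
            [0.0, 1.0, 0.0, y],
            [-s, 0.0, c, z],
            [0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid_inverse(m):
    """Invert a rigid transform (no scale): transpose R, translation = -R^T * t."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [inv_t[0]], rt[1] + [inv_t[1]], rt[2] + [inv_t[2]],
            [0.0, 0.0, 0.0, 1.0]]

def align_world(player1_anchor_to_world, player2_anchor_to_camera_rig):
    """Same chain as the pseudocode: CameraRig -> Anchor -> World."""
    player2_camera_rig_to_anchor = rigid_inverse(player2_anchor_to_camera_rig)
    return matmul(player1_anchor_to_world, player2_camera_rig_to_anchor)

# Hypothetical poses: the anchor in world space (sent by the host) and the
# same anchor as tracked in the client's camera rig space.
anchor_to_world = trs((1.0, 0.0, 2.0), 90.0)
anchor_to_rig = trs((-0.5, 0.0, 1.5), -30.0)

rig_to_world = align_world(anchor_to_world, anchor_to_rig)

# After alignment, the client's tracked anchor lands on the host's world pose.
anchor_via_client = matmul(rig_to_world, anchor_to_rig)
aligned = all(math.isclose(anchor_via_client[i][j], anchor_to_world[i][j], abs_tol=1e-9)
              for i in range(4) for j in range(4))
```

The final check expresses the goal of world alignment: once the client's camera rig is placed at `rig_to_world`, both players see the anchor at the same world pose.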

Unity Function and Sample

Please see “For World Alignment” in Unity Scene Perception.