WaveVR_ControllerInputModule¶

Older versions (2.0.23 and before) can be found here: Controller Input Module

A new version can be found here: WaveVR_ControllerInputModule


Introduction¶

Unity's Event System lets objects receive events from an input source and take corresponding actions.

An Input Module contains the main logic for how the Event System behaves. Input Modules are used for:

- Handling input

- Managing event mechanism

- Sending events to scene objects.

This script provides an input module that supports multiple controllers.

Resources¶

Script WaveVR_ControllerInputModule is located in Assets/WaveVR/Scripts

Script WaveVR_EventHandler is located in Assets/WaveVR/Extra/EventSystem

Script GOEventTrigger is located in Assets/WaveVR/Extra

Other EventSystem scripts are located in Assets/WaveVR/Scripts/EventSystem

Samples ControllerInputModule_Test and MixedInputModule_Test are located in Assets/Samples/ControllerInputModule_Test/Scenes

Prefabs ControllerLoader and InputModuleManager are located in Assets/WaveVR/Prefabs

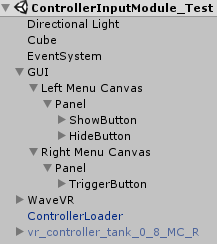

Introduction for the Samples 1¶

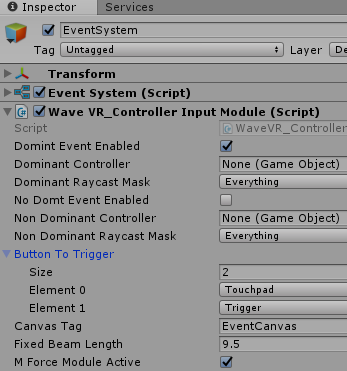

- EventSystem

The EventSystem is added automatically when a Canvas is added to a scene. It can also be added manually by right-clicking in the Hierarchy panel and choosing UI > Event System.

WaveVR_ControllerInputModule is added here to replace the Unity default “Standalone Input Module”.

It has six variables:

- Right Controller: Right controller GameObject. This can be empty if using WaveVR_ControllerLoader

- Right Raycast Mask: The Event Mask of the right controller’s PhysicsRaycaster.

- Left Controller: Left controller GameObject. This can be empty if using WaveVR_ControllerLoader

- Left Raycast Mask: The Event Mask of the left controller’s PhysicsRaycaster.

- Button To Press: Choose a button on the controller to trigger an event.

- Canvas Tag: The Tag of the canvas which can receive events of EventSystem.

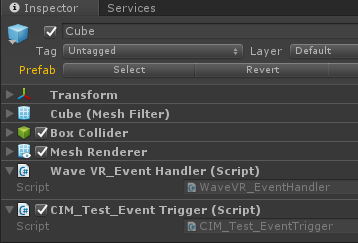

- Cube

WaveVR_EventHandler is used for handling EventSystem events.

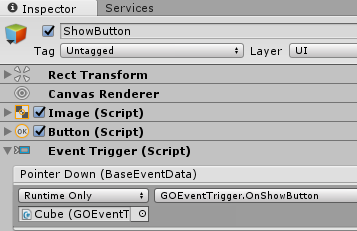

GOEventTrigger provides a variety of functions which can be triggered by other GameObjects.

- GUI

- Old version (v2.0.24 and before):

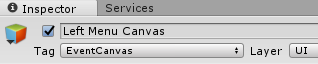

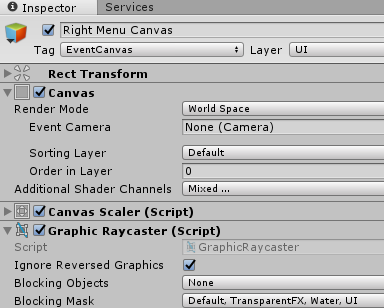

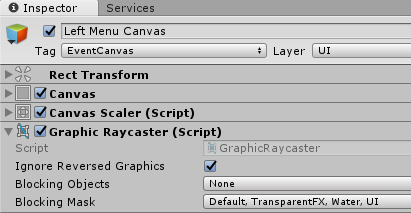

Beneath GUI there are two canvases, Left Menu Canvas and Right Menu Canvas, and both have the Tag value EventCanvas.

The tag identifies the Canvases that can receive EventSystem events.

The tag must match the value of the Canvas Tag field of WaveVR_ControllerInputModule in EventSystem.

When a Canvas is created, a Graphic Raycaster is added automatically.

The Graphic Raycaster determines which UI elements on the Canvas are hit, so that they can receive events from the EventSystem.

In ShowButton and HideButton of Left Menu Canvas, the Pointer Down event of Event Trigger is set.

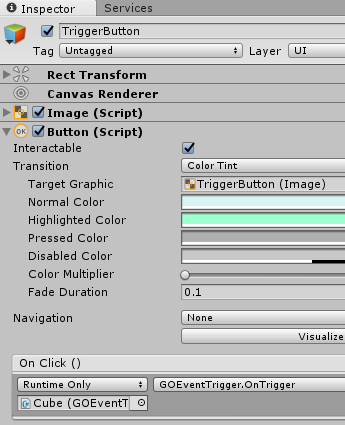

In TriggerButton of Right Menu Canvas, the On Click of Button is set.

- Current version:

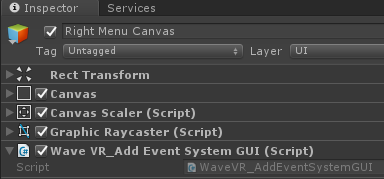

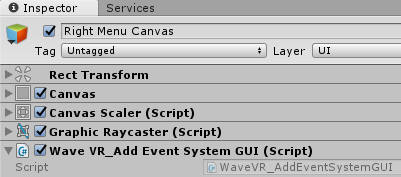

Instead of using a Tag to recognize event GUIs, WaveVR provides a new script, WaveVR_AddEventSystemGUI, to mark a GUI as able to receive events from the EventSystem.

So now you have two ways to send events to GUI:

- Set the GUI’s Tag and enter the same tag value in the Canvas Tag field of WaveVR_ControllerInputModule.

- Add the component WaveVR_AddEventSystemGUI to GUI(s).

These two ways can be used concurrently.

- Controller

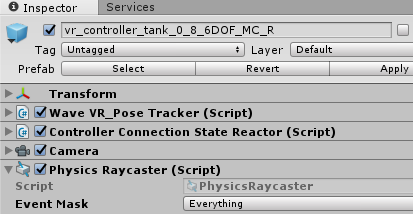

Right controller vr_controller_tank_0_8_MC_R is a prefab from Assets/ControllerModel/Link/Resources/Controller

In order to detect the physical objects, the Physics Raycaster component is added.

Physics Raycaster depends on the Camera, therefore, the Camera component is also added.
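For a custom controller, the same pair of components could also be added from a script. The following is only a sketch under assumptions: the class name ControllerRaycasterSetupSketch and the raycastMask field are hypothetical, and only standard Unity calls (GetComponent, AddComponent, PhysicsRaycaster.eventMask) are used.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical setup script for a custom controller GameObject; not part of WaveVR.
public class ControllerRaycasterSetupSketch : MonoBehaviour
{
    // Layers the controller's ray is allowed to hit (assumption: all layers by default).
    public LayerMask raycastMask = ~0;

    void Awake()
    {
        // PhysicsRaycaster casts its rays through a Camera on the same GameObject.
        if (GetComponent<Camera>() == null)
            gameObject.AddComponent<Camera>();

        PhysicsRaycaster raycaster = GetComponent<PhysicsRaycaster>();
        if (raycaster == null)
            raycaster = gameObject.AddComponent<PhysicsRaycaster>();

        // Restrict which layers can receive events from this controller.
        raycaster.eventMask = raycastMask;
    }
}
```

A Camera added this way uses Unity's default settings; how the sample prefab configures its own Camera is not covered by this sketch.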

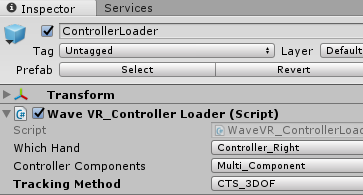

ControllerLoader is a prefab from Assets/WaveVR/Prefabs. For details, refer to WaveVR_ControllerLoader.

Note: In fact, we encourage you to use ControllerLoader to load the controller model of different controllers automatically instead of using a specified controller model (e.g. vr_controller_tank_0_8_MC_R).

In order to demonstrate which components are used in a controller model, we put vr_controller_tank_0_8_MC_R in this sample.

Developers can also design their own controllers, in which case ControllerLoader is not needed.

If you use a custom controller, remember to assign it to the Right Controller or Left Controller field of WaveVR Controller Input Module.

Introduction for the Samples 2¶

This sample demonstrates the usage of InputModuleManager and a new event GUI script.

- Left Menu Canvas and Right Menu Canvas

As mentioned above, instead of the Tag of the Canvas, WaveVR provides WaveVR_AddEventSystemGUI to mark a GUI as able to receive events from the EventSystem.

This sample uses the Tag on Left Menu Canvas and WaveVR_AddEventSystemGUI on Right Menu Canvas.

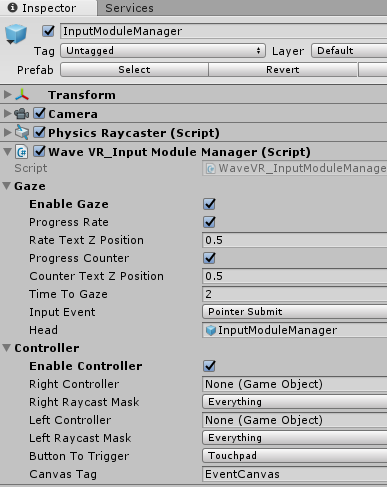

- InputModuleManager

This is a prefab from Assets/WaveVR/Prefabs.

You can enable the gaze feature by checking Enable Gaze and enable the controller input module by checking Enable Controller.

A gaze reticle will be generated automatically if Enable Gaze is checked.

A controller model is loaded by WaveVR_ControllerLoader and set as an event controller if Enable Controller is checked.

If Enable Controller is not checked, a controller model will still be loaded but cannot send any events.

If you use custom controller(s) instead of using ControllerLoader to load the WaveVR default controller(s), you must drag the custom controller GameObject into the Right Controller or Left Controller field and set the Physical Raycast Mask if needed.

Script¶

Work Flow¶

A raycaster is needed to send events. Unity uses the GraphicRaycaster for GUI and the PhysicsRaycaster for physical objects.

When the raycaster hits a new object, that object receives the Enter event and the previously hit object receives the Exit event.

While the raycaster stays on the same object, the object receives Hover events continuously.

    // 1. Get graphic raycast object.
    ResetPointerEventData (_dt);
    GraphicRaycast (_eventController, _event_camera);

    if (GetRaycastedObject (_dt) == null)
    {
        // 2. Get physic raycast object.
        PhysicsRaycaster _raycaster = _controller.GetComponent<PhysicsRaycaster> ();
        if (_raycaster == null)
            continue;

        ResetPointerEventData (_dt);
        PhysicRaycast (_eventController, _raycaster);
    }

    // 3. Exit previous object, enter new object.
    OnTriggerEnterAndExit (_dt, _eventController.event_data);

    // 4. Hover object.
    GameObject _curRaycastedObject = GetRaycastedObject (_dt);
    if (_curRaycastedObject != null && _curRaycastedObject == _eventController.prevRaycastedObject)
    {
        OnTriggerHover (_dt, _eventController.event_data);
    }

When the controller button is released while the raycaster is on a Button, the onClick function of the Button is invoked.

    if (btnPressDown)
        _eventController.eligibleForButtonClick = true;

    if (btnPressUp && _eventController.eligibleForButtonClick)
        onButtonClick (_eventController);
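The press and release logic above can be modelled outside Unity as a small state holder. This is a minimal sketch, not the module's actual code: the class name ClickEligibilitySketch, the Update method, and the choice to clear the flag once a click has fired are assumptions made for illustration.

```csharp
using System;

// Hypothetical stand-alone model of the eligibility flag; not part of WaveVR.
public class ClickEligibilitySketch
{
    // Mirrors eligibleForButtonClick in the excerpt above.
    public bool EligibleForButtonClick { get; private set; }

    // Feed one frame of button state; returns true when a click should fire.
    public bool Update(bool btnPressDown, bool btnPressUp)
    {
        if (btnPressDown)
            EligibleForButtonClick = true;

        if (btnPressUp && EligibleForButtonClick)
        {
            // Assumption: the flag is cleared once the click has been delivered.
            EligibleForButtonClick = false;
            return true;
        }

        return false;
    }
}
```

In this model a release that was not preceded by a press produces no click, while a press followed by a release produces exactly one.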

Change Event Camera When Graphic Raycast¶

The Canvas of a GUI has only one Event Camera for handling the UI events.

But you may have multiple controllers and they all trigger events to Canvas.

In order to make sure the Canvas can handle events from cameras of both controllers, you will need to switch the event camera before casting.

A scene may contain multiple Canvases, only some of which need to receive events.

You only have to change the event camera of the Canvases that need to receive events.

As a quick way to identify those Canvases by Tag, the script provides a Canvas Tag text field.

Then, you can easily get the Canvases:

    GameObject[] _tag_GUIs = GameObject.FindGameObjectsWithTag (CanvasTag);

You can get the new event camera from a specified controller:

    Camera _event_camera = (Camera)_controller.GetComponent (typeof(Camera));

Then, the event camera can be changed easily:

    _canvas.worldCamera = _event_camera;
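Putting these three steps together, a helper along the following lines could retarget every tagged Canvas before a controller's graphic raycast. This is a sketch under assumptions: the class and method names are hypothetical, and only standard Unity calls (GameObject.FindGameObjectsWithTag, GetComponent, Canvas.worldCamera) are used.

```csharp
using UnityEngine;

// Hypothetical helper; not part of WaveVR.
public static class EventCameraSwitcherSketch
{
    // Points every Canvas tagged with canvasTag at the Camera on the given controller.
    public static void UseControllerCamera(GameObject controller, string canvasTag)
    {
        if (controller == null || string.IsNullOrEmpty(canvasTag))
            return;

        Camera eventCamera = controller.GetComponent<Camera>();
        if (eventCamera == null)
            return; // No camera on this controller, so there is nothing to switch to.

        // Note: FindGameObjectsWithTag throws if the tag is not defined in the project.
        GameObject[] taggedGuis = GameObject.FindGameObjectsWithTag(canvasTag);
        foreach (GameObject gui in taggedGuis)
        {
            Canvas canvas = gui.GetComponent<Canvas>();
            if (canvas != null)
                canvas.worldCamera = eventCamera;
        }
    }
}
```

Calling such a helper once per controller, immediately before that controller's graphic raycast, keeps each Canvas pointed at the camera of the controller currently being processed.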

Pointer Event Flow¶

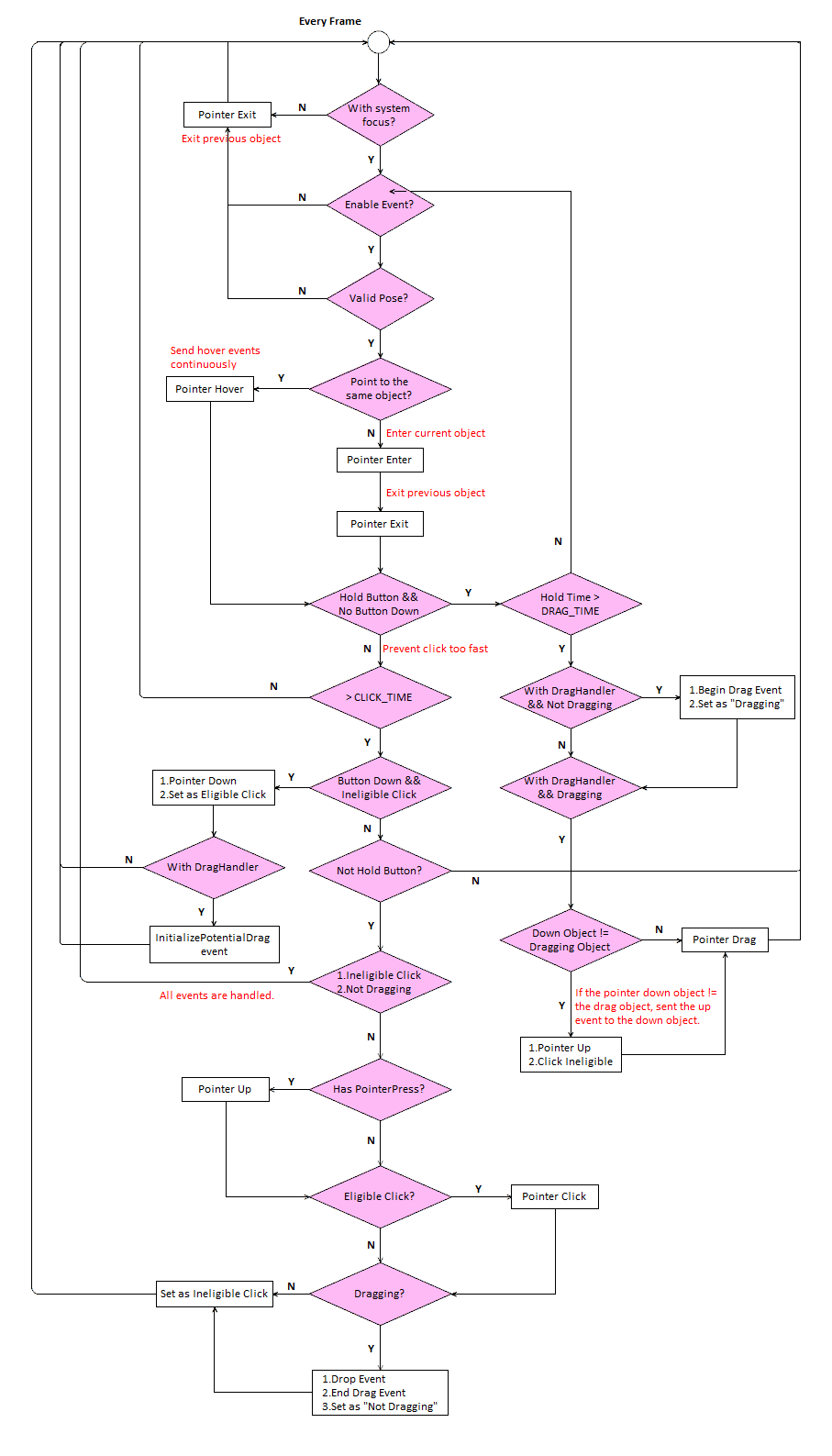

Apart from the Enter and Exit events of Event Trigger Type, the flow of the other events is as follows.

Summary:

- In the frame where the button changes from unpressed to pressed:

  - The Pointer Down event is sent.

  - The initializePotentialDrag event is sent to the object that has an IDragHandler.

- In the frames where the button stays pressed:

  - The beginDrag event is sent.

  - The Pointer Up event is sent to the object that received the Pointer Down event, if it is different from the current object.

  - The Drag event is sent continuously.

- In the frame where the button changes from pressed to unpressed:

  - The Pointer Up event is sent.

  - The Pointer Click event is sent.

  - If the Drag event has been sent before, the Pointer Drop event and the endDrag event will be sent.
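The button-driven part of this flow can be sketched as a small frame-by-frame state machine. This is an illustrative model only: the class name, the string event names, and the one-call-per-frame Step method are assumptions, and the case where Pointer Up is redirected to a different object is left out for brevity.

```csharp
using System.Collections.Generic;

// Hypothetical model of the summary above; not part of WaveVR or Unity.
public class PointerFlowSketch
{
    private bool wasPressed; // Button state in the previous frame.
    private bool dragging;   // True once beginDrag has been sent.

    // Call once per frame with the current button state.
    // Returns the events that would be sent in this frame, in order.
    public List<string> Step(bool pressed)
    {
        var events = new List<string>();

        if (pressed && !wasPressed)
        {
            // Unpressed -> pressed.
            events.Add("PointerDown");
            events.Add("InitializePotentialDrag");
        }
        else if (pressed && wasPressed)
        {
            // Button held: start the drag once, then keep dragging.
            if (!dragging)
            {
                events.Add("BeginDrag");
                dragging = true;
            }
            events.Add("Drag");
        }
        else if (!pressed && wasPressed)
        {
            // Pressed -> unpressed.
            events.Add("PointerUp");
            events.Add("PointerClick");
            if (dragging)
            {
                events.Add("Drop");
                events.Add("EndDrag");
                dragging = false;
            }
        }

        wasPressed = pressed;
        return events;
    }
}
```

In this model, a press that lasts a single frame yields PointerDown and InitializePotentialDrag, followed on release by PointerUp and PointerClick, with no drag events in between.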

The Enter, Exit and Hover events are not related to the button state, so they are not included in the flow above:

- When the raycaster first hits an object, the Enter event will be sent.

- When hovering over a raycasted object, the Hover event will be sent.

- When leaving a raycasted object, the Exit event will be sent.

Custom Defined Events¶

The WaveVR-defined EventSystem scripts are located in Assets/WaveVR/Scripts/EventSystem

WaveVR_ExecuteEvents is used for sending events.

IWaveVR_EventSystem is used for receiving events.

You do not need to implement event sending yourself, as it is managed in this script.

However, if you want GameObjects with custom scripts to receive events, the scripts must implement the corresponding event interfaces:

    public class WaveVR_EventHandler: MonoBehaviour,
        IPointerEnterHandler,
        IPointerExitHandler,
        IPointerDownHandler,
        IBeginDragHandler,
        IDragHandler,
        IEndDragHandler,
        IDropHandler,
        IPointerHoverHandler

IPointerHoverHandler is a WaveVR-defined event interface which is not part of Event Trigger Type.
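As an illustration of receiving events in a custom script, the sketch below implements three of the standard Unity interfaces from the list above. The class name MyEventReceiverSketch is hypothetical. IPointerHoverHandler is deliberately left out, because its method signature is not shown in this document.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical receiver. Attach it to a GameObject with a Collider (hit by the
// PhysicsRaycaster) or to a UI element (hit by the GraphicRaycaster).
public class MyEventReceiverSketch : MonoBehaviour,
    IPointerEnterHandler,
    IPointerExitHandler,
    IPointerDownHandler
{
    // Called when the controller's ray first lands on this object.
    public void OnPointerEnter(PointerEventData eventData)
    {
        Debug.Log(name + ": pointer entered");
    }

    // Called when the controller's ray leaves this object.
    public void OnPointerExit(PointerEventData eventData)
    {
        Debug.Log(name + ": pointer exited");
    }

    // Called in the frame where the chosen button goes from unpressed to pressed.
    public void OnPointerDown(PointerEventData eventData)
    {
        Debug.Log(name + ": pointer down");
    }
}
```

The remaining interfaces follow the same pattern: add the interface to the class declaration and implement its single handler method.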