Unity Plugin Getting Started

The Wave Unity SDK provides integrated plugins for Unity content. By importing the Wave Unity plugin’s scripts, you can manipulate the poses of the head and controllers. The plugin’s render script can also easily turn the main camera into a stereo camera for VR.

We assume that you already have experience developing Android apps with the Unity IDE and a working knowledge of the C# language.


Set up your development environment

The target platform of VIVE Wave™ is Android, so you need to link the Android SDK to the Unity IDE.

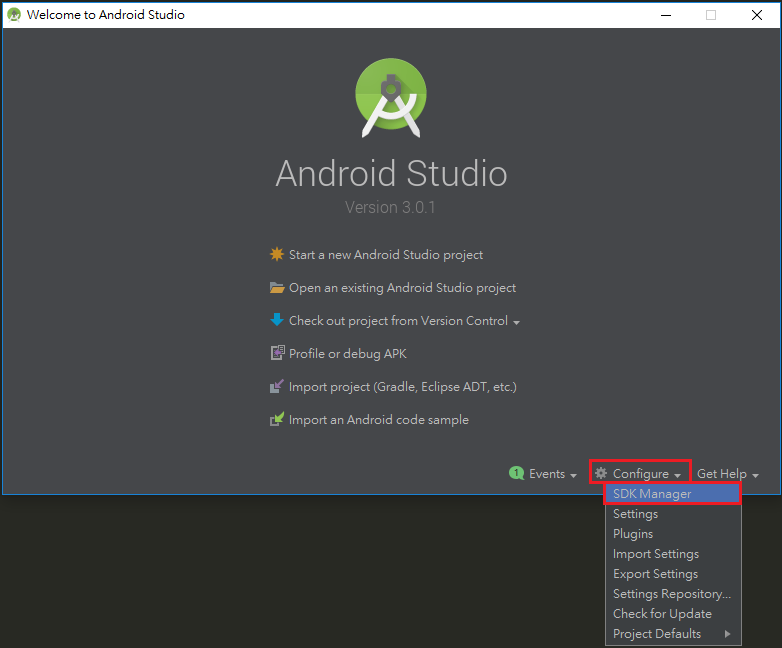

Install Android Studio.

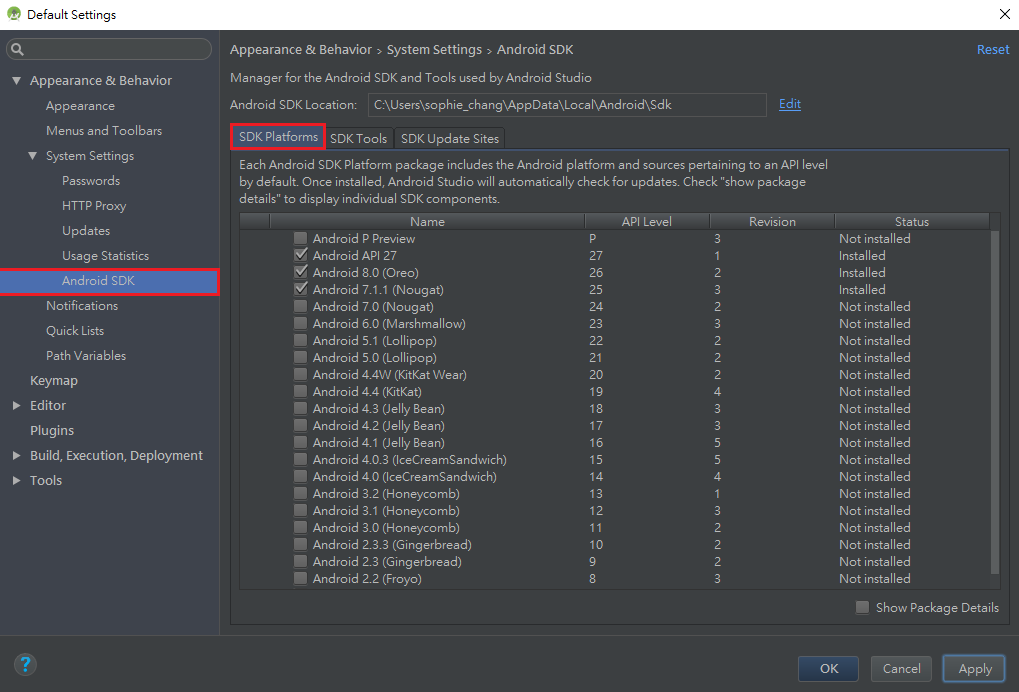

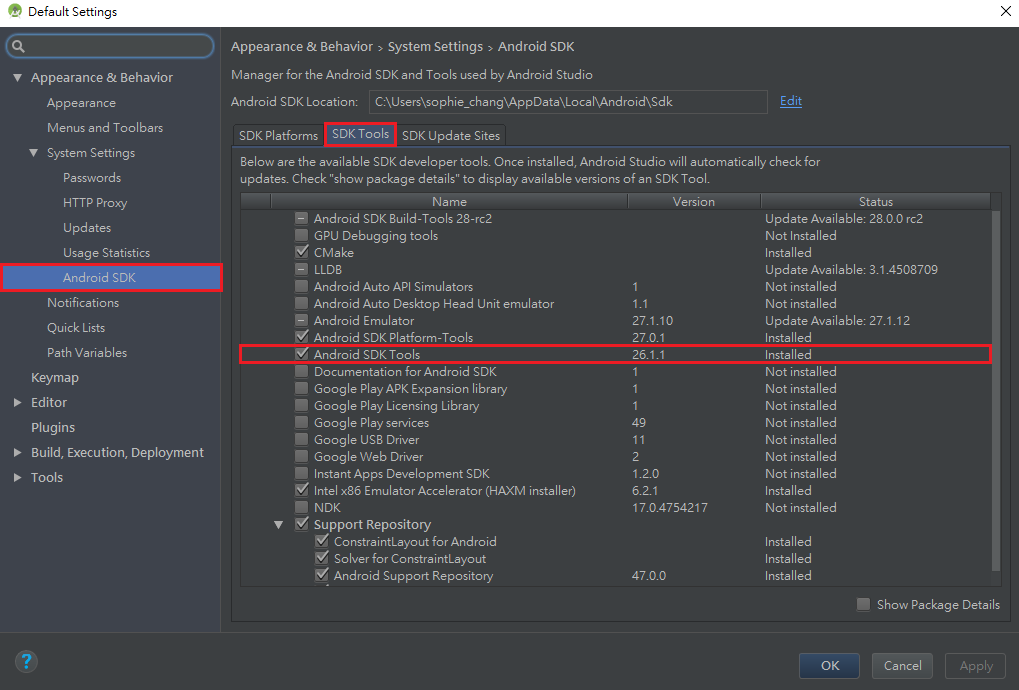

Check your Android SDK version and Android SDK Tools version in Configure > SDK Manager:

- Android SDK 7.1.1 ‘Nougat’ (API level 25) or higher.

- Android SDK Tools version 25 or higher.

Install Unity.

Launch your Unity IDE.

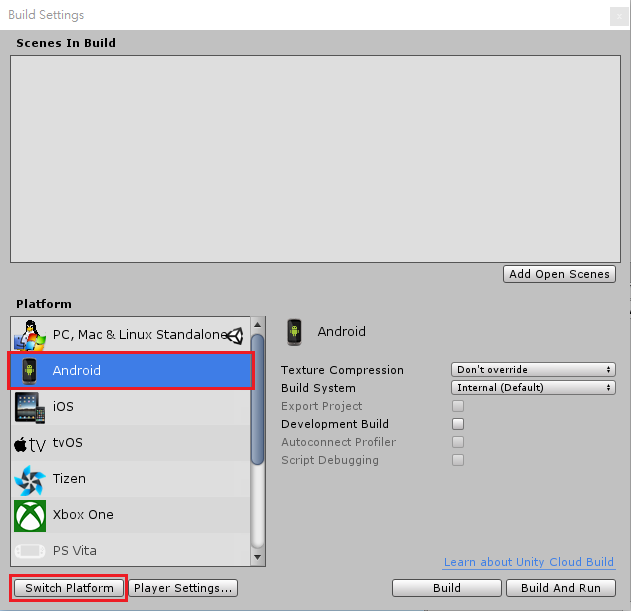

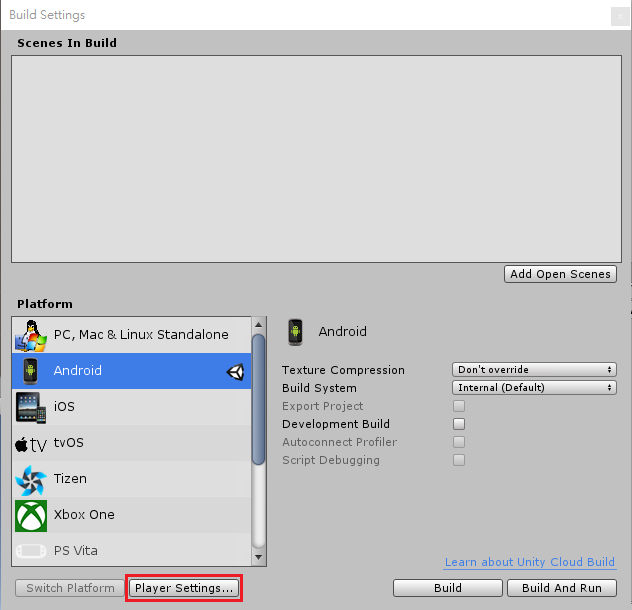

Switch the platform to Android in File > Build Settings…, then download and install the Android module.

Relaunch Unity.

Switch the platform to Android.

Open the Player Settings configuration panel.

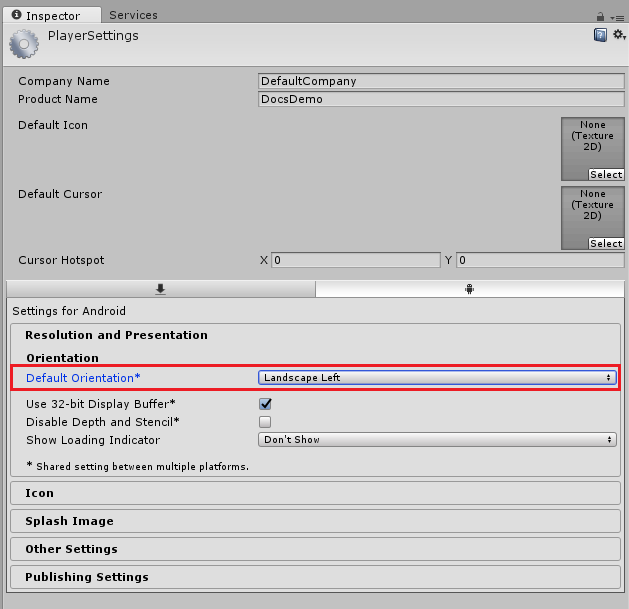

In Resolution and Presentation > Default Orientation, select Landscape Left.

Note

The Default Orientation of a WaveVR app MUST be Landscape Left. Otherwise, the rendering result in your app may be wrong.

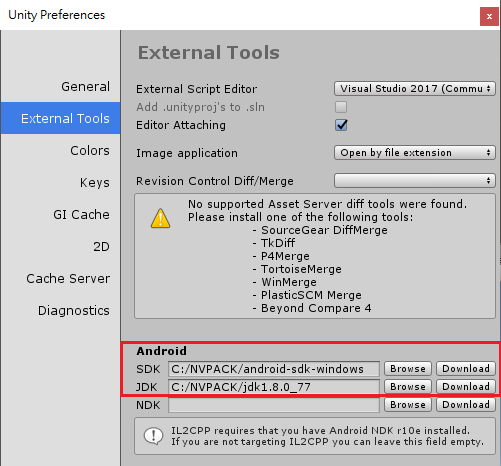

Set Android SDK and JDK paths in Edit > Preferences… > External Tools.

Import WaveVR plugin into Unity projects

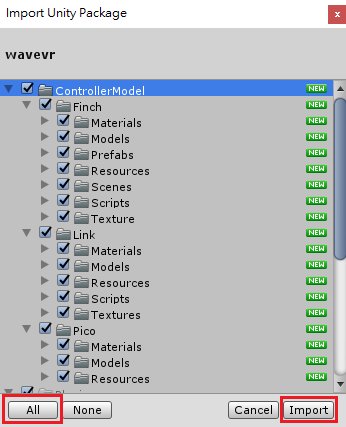

Import wvr_unity_sdk.unitypackage through Assets > Import Package > Custom Package….

Select All components, and click Import.

Note

After the import completes, a dialog pops up listing the project settings WaveVR suggests. Beginners are recommended to click the “Accept all” button.

If you missed this dialog, you can open it again from the WaveVR menu.

Using Prefab for Stereo View

Delete the auto-generated camera in your scene.

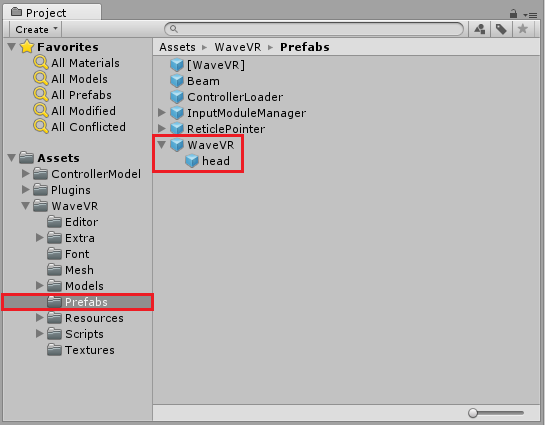

Drag and drop the WaveVR prefab in Assets/WaveVR/Prefabs into your scene.

The prefab has a game object named head. It includes the following components:

Camera is the main camera. Its near/far values determine both eyes’ near/far values. It lets the Game view display monocular vision from the head’s position. However, in play mode its Culling Mask is Nothing, which means nothing is shown on the display through this camera.

WaveVR_Render is the main script for the render lifecycle. It creates the eye and ear objects, and in play mode it drives both eyes to render and display binocular vision (details are given later in this section). All game objects in a scene should be ready before the controller is initialized, so set the execution order of WaveVR_Render to -100, which makes it run before scripts that keep the default order of 0. You can set this in Edit > Project Settings > Script Execution Order.

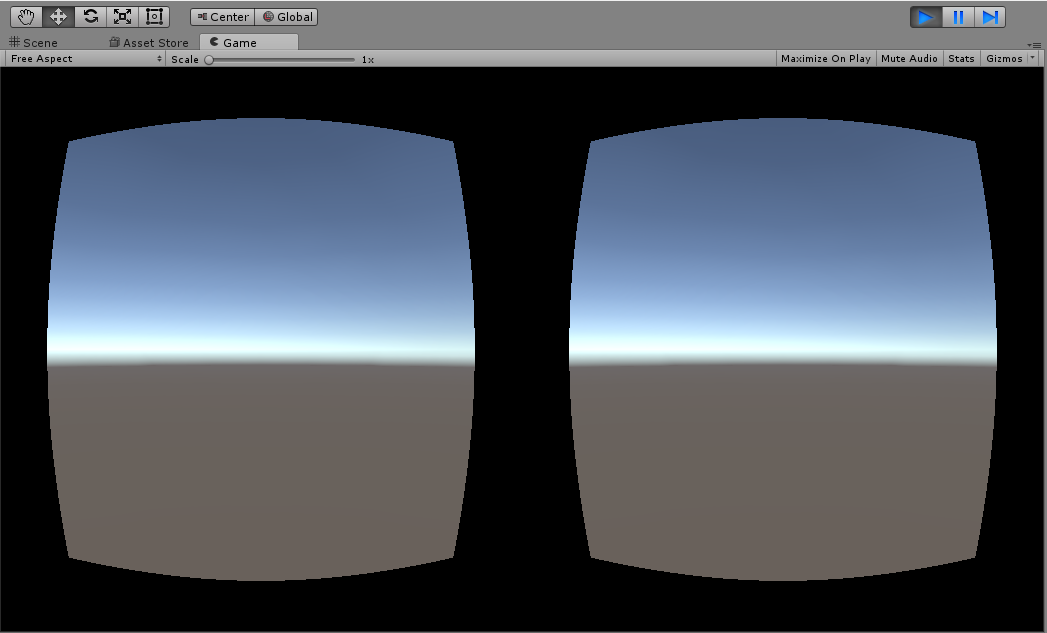

Binocular vision

WaveVR_PoseTracker receives pose events and updates the game object’s transform to follow the tracked device. You can choose an index to decide which device is tracked.
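Unity calls a given event function on scripts in ascending Script Execution Order, and scripts not listed in that panel use the default value 0. The plain-Python sketch below models only that ordering rule to show why -100 puts WaveVR_Render ahead of ordinary scripts. It is not Unity code, and the script names other than WaveVR_Render are hypothetical.

```python
# Illustrative model only: in a real project, Unity decides the call order.
# Assumption: lower execution-order values run first; unlisted scripts default to 0.
DEFAULT_ORDER = 0


def call_order(scripts, execution_order):
    """Return script names in the order an event function would reach them.

    scripts: script names in load order.
    execution_order: explicit orders, like entries in the Script Execution Order list.
    """
    # Ties keep their list order here; Unity does not guarantee an order for equal values.
    return sorted(scripts, key=lambda name: execution_order.get(name, DEFAULT_ORDER))


if __name__ == "__main__":
    # Hypothetical scene with two ordinary scripts plus WaveVR_Render.
    scripts = ["ControllerSetup", "GameLogic", "WaveVR_Render"]
    print(call_order(scripts, {"WaveVR_Render": -100}))
```

Any script that must run even earlier than WaveVR_Render would need a value below -100.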

Expand the Cameras

In play mode, the main camera is expanded at runtime. The following components and game objects are created and added to the head game object.

Expanded cameras

Inspector of expanded head

You can also expand the cameras by clicking the Expand button. After expanding, you can modify the created game objects.

Expand Button

In the hierarchy, the WaveVR game object acts like a body or a play-area origin: you place the user’s head in the scene by moving WaveVR. Do not change the transform of head, because it is overwritten with the HMD pose.
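Because head is a child of WaveVR, its world position is the WaveVR transform applied to the tracked (local) HMD position. The sketch below models that composition for position only. It assumes the rig is rotated only about the vertical y-axis, has a scale of 1, and follows Unity’s left-handed, y-up convention. The function name is hypothetical.

```python
import math


def head_world_position(rig_position, rig_yaw_degrees, hmd_local_position):
    """World position of the head from the WaveVR rig transform and the HMD pose.

    Assumptions (hypothetical model): the rig has only a yaw rotation about y and
    scale 1; rotation follows Unity's left-handed, y-up convention.
    """
    yaw = math.radians(rig_yaw_degrees)
    x, y, z = hmd_local_position
    # Rotate the local HMD offset by the rig's yaw.
    rx = x * math.cos(yaw) + z * math.sin(yaw)
    rz = -x * math.sin(yaw) + z * math.cos(yaw)
    # Then translate by the rig position.
    px, py, pz = rig_position
    return (px + rx, py + y, pz + rz)


# Moving the rig, not the head, relocates the user in the scene.
print(head_world_position((10.0, 0.0, 0.0), 0.0, (0.0, 1.7, 0.0)))
```

This is why edits to head’s own transform are lost. Only the rig’s position and rotation are under your control.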

This component is added to Eye Center after expanding:

PhysicsRaycaster (a built-in Unity script)

These are the game objects added as children of the head after expanding:

- Eye Center

- Eye Both

- Eye Right

- Eye Left

- Distortion

- Ear

- Loading

Eye Right and Eye Left are offset to the right and left according to the IPD, so each eye sees the scene from a slightly different position. Eye Both sits at the center between the two eyes and instead passes a different matrix to the shader for each eye when rendering. Each eye camera’s position is set at runtime according to the device, so you do not have to modify the eye transforms yourself. In the editor, the transforms are given preset positions.
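A minimal sketch of the eye layout just described. It assumes each eye is shifted along the head’s local x-axis by half the IPD, with no vertical or depth offset, and that Eye Both stays at the midpoint. The function name is hypothetical, and 0.064 m is only an example IPD. At runtime the real positions come from the device.

```python
def eye_local_positions(ipd_meters):
    """Local (x, y, z) offsets of the eye objects relative to head.

    Hypothetical model: the eyes differ only along local x, by half the IPD each
    way; Eye Both sits at the midpoint between them.
    """
    half = ipd_meters / 2.0
    return {
        "Eye Left": (-half, 0.0, 0.0),
        "Eye Right": (half, 0.0, 0.0),
        "Eye Both": (0.0, 0.0, 0.0),
    }


for name, position in eye_local_positions(0.064).items():
    print(name, position)
```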

If the game object holding WaveVR_Render has a Camera, that camera is copied to Eye Center and then disabled.

Each eye has a camera. Its near and far clip planes are set from the values of the Eye Center camera, while its projection and field of view are controlled by a projection matrix taken from the SDK. The plugin sets a target texture when rendering, and the viewport should cover the full texture (the default). The other camera values you can set are clear flags, background, culling mask, clipping planes, allow MSAA, and occlusion culling. All of these are copied from the main camera when the eye camera is created during expansion; after that initial copy, WaveVR does not modify them again. Dynamic Resolution is not supported, and only the Forward rendering path is supported.
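The split between the near/far planes, which follow Eye Center, and the other settings, which are copied only once, can be modeled as below. This is a plain-Python sketch with hypothetical class, field, and function names, not the plugin’s code. Every setting is copied from the main camera when the eye camera is created, and afterwards only the clip planes are re-synced from Eye Center.

```python
from dataclasses import dataclass, replace


@dataclass
class CameraSettings:
    # Hypothetical subset of the Unity Camera properties named in the text.
    near: float = 0.3
    far: float = 1000.0
    clear_flags: str = "Skybox"
    culling_mask: str = "Everything"
    allow_msaa: bool = True


def create_eye_camera(main_camera):
    """During expansion, copy every setting from the main camera once."""
    return replace(main_camera)  # a new, independent CameraSettings instance


def sync_clip_planes(eye, eye_center):
    """After creation, only near/far follow the Eye Center camera."""
    eye.near = eye_center.near
    eye.far = eye_center.far


main_camera = CameraSettings()
eye_center = replace(main_camera)   # the main camera's settings copied to Eye Center
eye = create_eye_camera(main_camera)
eye.culling_mask = "UI"             # an edit made on the eye camera itself
eye_center.near = 0.1
eye_center.culling_mask = "Default"
sync_clip_planes(eye, eye_center)
print(eye.near, eye.culling_mask)   # 0.1 UI
```

The eye camera picks up the new near plane but keeps its own culling mask. This reflects the “set once, then left alone” behavior described above.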

Distortion distorts both rendered eye textures and presents them on the display. It works only in Unity Editor Play Mode, as a preview, and is disabled when the project is built as an app. The WaveVR compositor, which works only on a target device, is used instead.

Ear has an audio listener.

Loading is a mask that blocks other cameras’ output on screen until WaveVR’s graphics are initialized. It is disabled as soon as initialization completes.

Degrees of Freedom

A VR device that can track both a user’s rotation and position is a 6DoF device, while one that can track only rotation is a 3DoF device.

WaveVR supports both 3DoF and 6DoF. However, 6DoF apps are usually designed differently from 3DoF apps, so switching between the two dynamically may not be easy.

To support only 3DoF, use the option in WaveVR_PoseTracker: uncheck Track Position in every WaveVR_PoseTracker component, and set Tracking Space to Tracking Universe Seated in WaveVR_Render. This keeps the head and controller positions fixed where you want them to be.
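To make the effect of unchecking Track Position concrete, here is a sketch of how a pose tracker could apply a device pose. Rotation is always applied, while position is applied only when position tracking is enabled. The function and parameter names are hypothetical and are not taken from WaveVR_PoseTracker.

```python
def apply_pose(current_position, device_position, device_rotation, track_position=True):
    """Return the (position, rotation) a tracked object ends up with.

    Hypothetical model: rotation always follows the device; position follows it
    only when track_position is True, otherwise the object's own position is kept.
    """
    position = device_position if track_position else current_position
    return position, device_rotation


# 3DoF: the head stays where it was placed but still turns with the user.
placed = (0.0, 1.7, 0.0)
rotation = (0.0, 0.7071, 0.0, 0.7071)  # example quaternion (x, y, z, w)
print(apply_pose(placed, (0.2, 1.6, 0.1), rotation, track_position=False))
```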

The PoseTracker

Choose a tracking space

To support 6DoF, choose Model_Origin On Head or Model_Origin On Ground as the tracking space for your application. Model_Origin On Head is currently the default.

- Model_Origin On Head: the origin of the 6DoF pose is on the head (no height offset applied).

- Model_Origin On Ground: the origin of the 6DoF pose is on the ground (height offset applied).

Note

The height offset is either 1.7 meters (the default) or, if the current device supports it, the value obtained from height calibration during Room Setup.
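A minimal sketch of how the two origins differ, assuming the height offset is simply added to the vertical (y) component of the head position. The function name is hypothetical. 1.7 m is the default offset from the note above, and a calibrated value from Room Setup can be passed in instead.

```python
DEFAULT_HEIGHT_OFFSET_M = 1.7  # default height offset from the note above


def head_position(pose_position, origin, height_offset=DEFAULT_HEIGHT_OFFSET_M):
    """Model the head position reported for a given tracking origin.

    Assumption: "Model_Origin On Ground" adds the height offset to y, and
    "Model_Origin On Head" adds nothing.
    """
    x, y, z = pose_position
    if origin == "Model_Origin On Ground":
        return (x, y + height_offset, z)
    if origin == "Model_Origin On Head":
        return (x, y, z)
    raise ValueError(f"unknown tracking origin: {origin}")


print(head_position((0.0, 0.0, 0.0), "Model_Origin On Ground"))  # (0.0, 1.7, 0.0)
```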