FacialExpressionMaker

Introduction

FacialExpressionMaker is a tool that makes it easy to map the facial expressions of a VRM avatar to Wave facial expressions.

Note

The FacialExpressionMaker feature is currently experimental.


Prerequisite

VRoid and ARKit VRM files are supported through the UniVRM package VRM-0.109.0_7aff.unitypackage. UniVRM requires Unity 2020.3.40f1 or later.
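The version requirement can be checked mechanically, for example in a build script. The following is a minimal sketch in Python, not part of the Wave SDK or UniVRM; the function names are hypothetical, and it assumes Unity version strings of the form `major.minor.patch` followed by a release letter (`a`, `b`, `f`, or `p`) and a build number, ordered alpha, beta, final, patch.

```python
import re

# Assumed ordering of Unity release-type letters: alpha < beta < final < patch.
_RELEASE_ORDER = {"a": 0, "b": 1, "f": 2, "p": 3}

# Matches the leading "2020.3.40f1" part; any trailing suffix is ignored.
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)([abfp])(\d+)")


def parse_unity_version(text):
    """Turn a Unity version string into a tuple that compares correctly."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized Unity version: {text!r}")
    major, minor, patch, kind, build = match.groups()
    return (int(major), int(minor), int(patch), _RELEASE_ORDER[kind], int(build))


# Minimum version stated in the prerequisite above.
MINIMUM_VERSION = parse_unity_version("2020.3.40f1")


def meets_minimum(text):
    """Return True if the given Unity version is 2020.3.40f1 or later."""
    return parse_unity_version(text) >= MINIMUM_VERSION
```

For example, `meets_minimum("2021.3.1f1")` is true under these assumptions, while `meets_minimum("2020.3.39f1")` is false.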

How to use

Model Parser

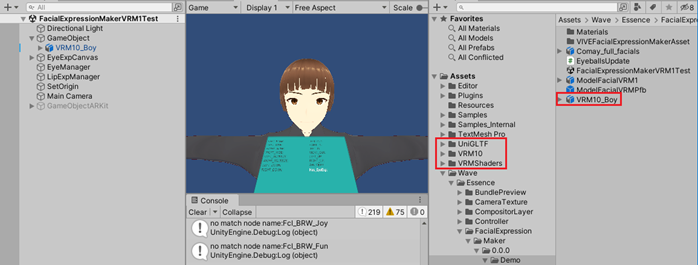

Import VRM-0.109.0_7aff.unitypackage into your project to set up the model parser for VRM files. After you import the VRM package and a model file, the corresponding folders and files appear in the Unity project.

You can open the demo scene FacialExpressionMakerVRM1Test.unity.

Note

This tool currently supports blend shapes only.

Alternatively, follow these steps:

- Import a VRM model into your project using the Model parser.

- After completing Steps 2~7, you will have a [*]config.asset file for facial expression mapping.

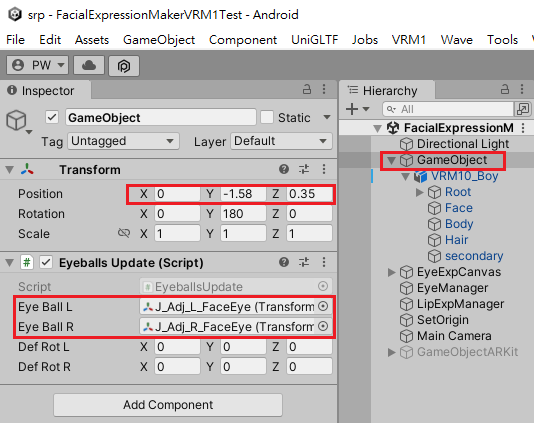

- Drag the avatar model into the scene so that it becomes a GameObject.

Add the EyeballsUpdate component so that eye tracking data is applied to the model, then assign the model's eye skeleton object to EyeballsUpdate.

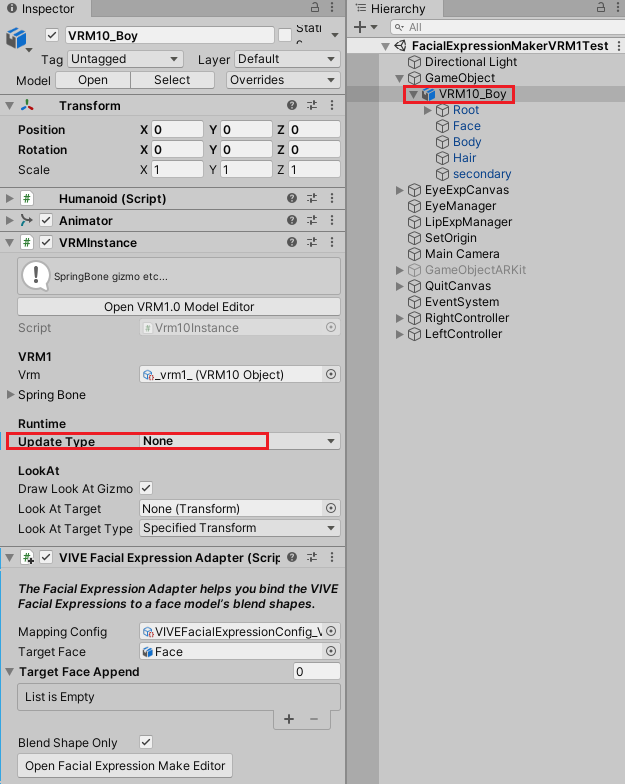

- In the model's Inspector, set UpdateType to None.

BlendShape Adapter

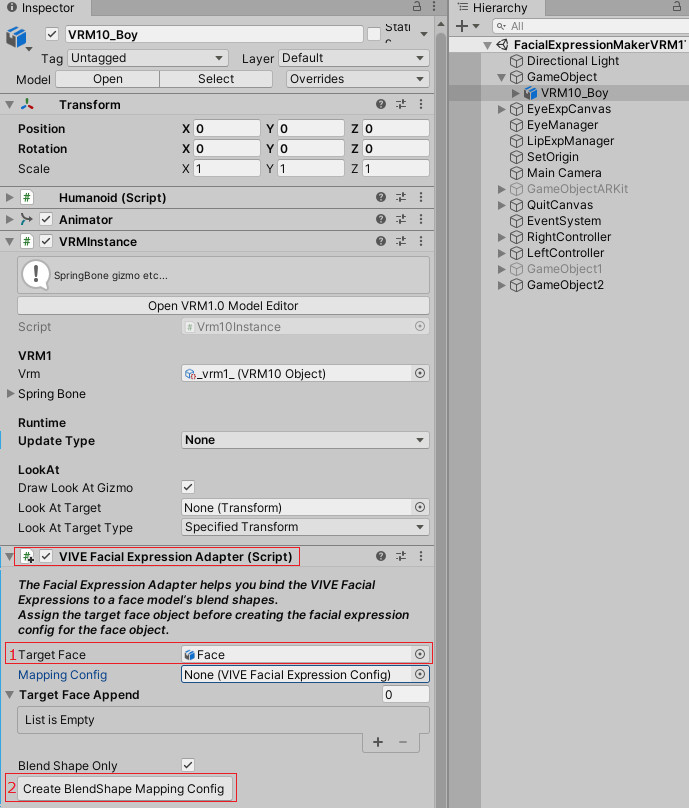

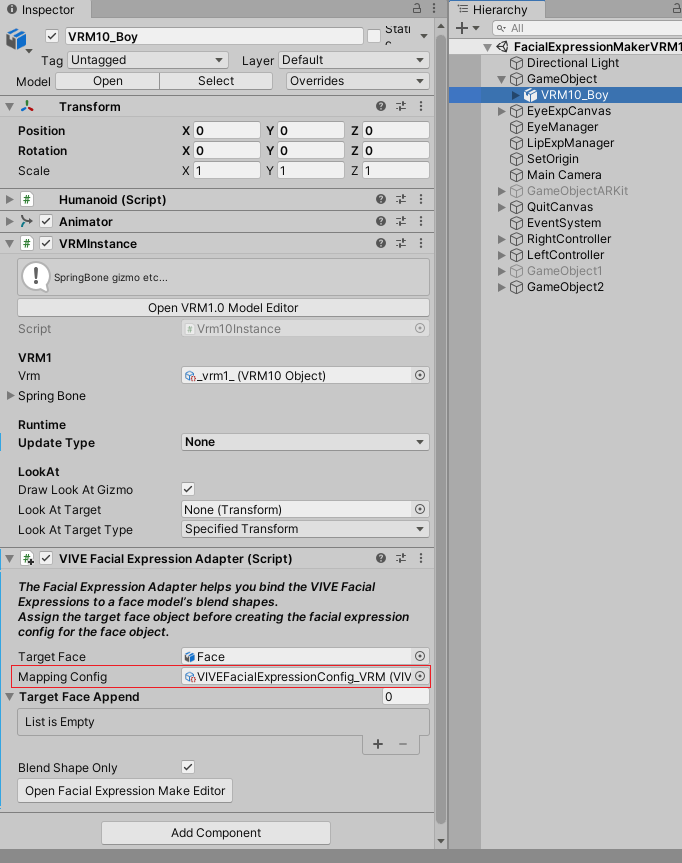

- Add the VIVEFacialTrackingMakerAdapter component to the model. Set the model's Target face before creating or opening a blendshape mapping.

BlendShape Mapping

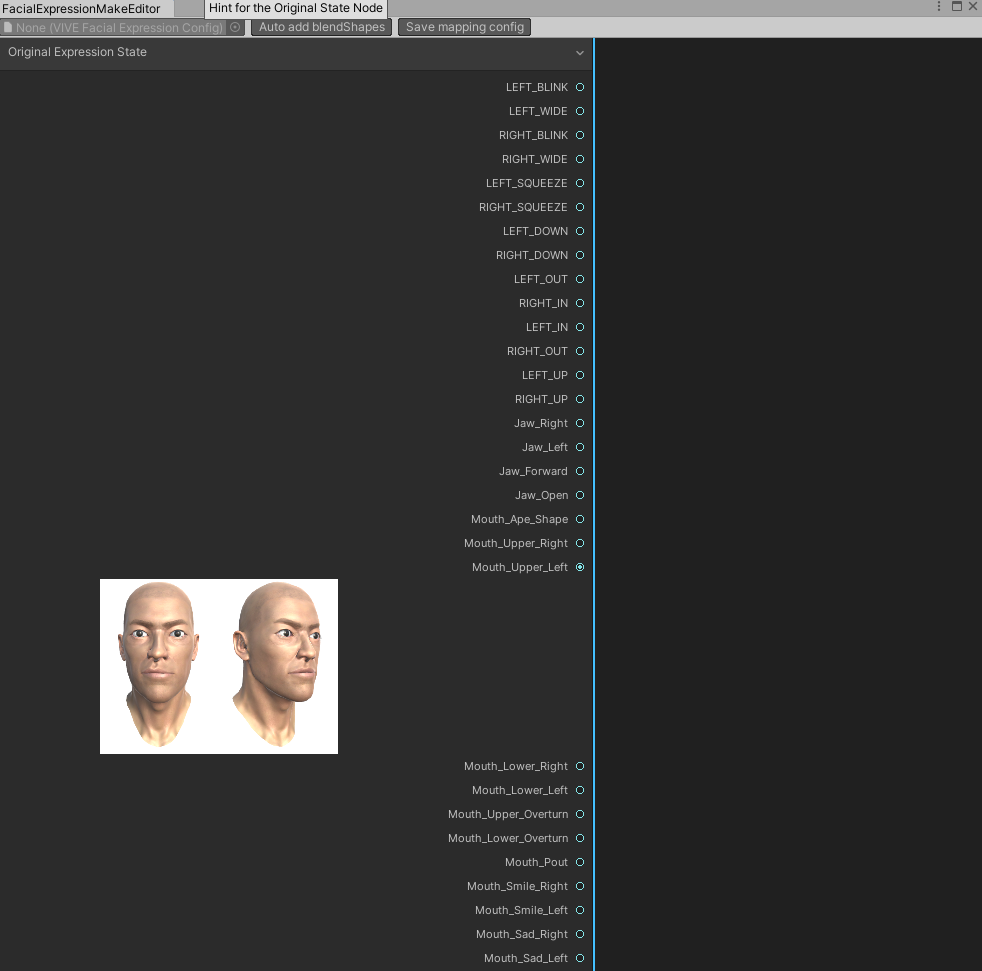

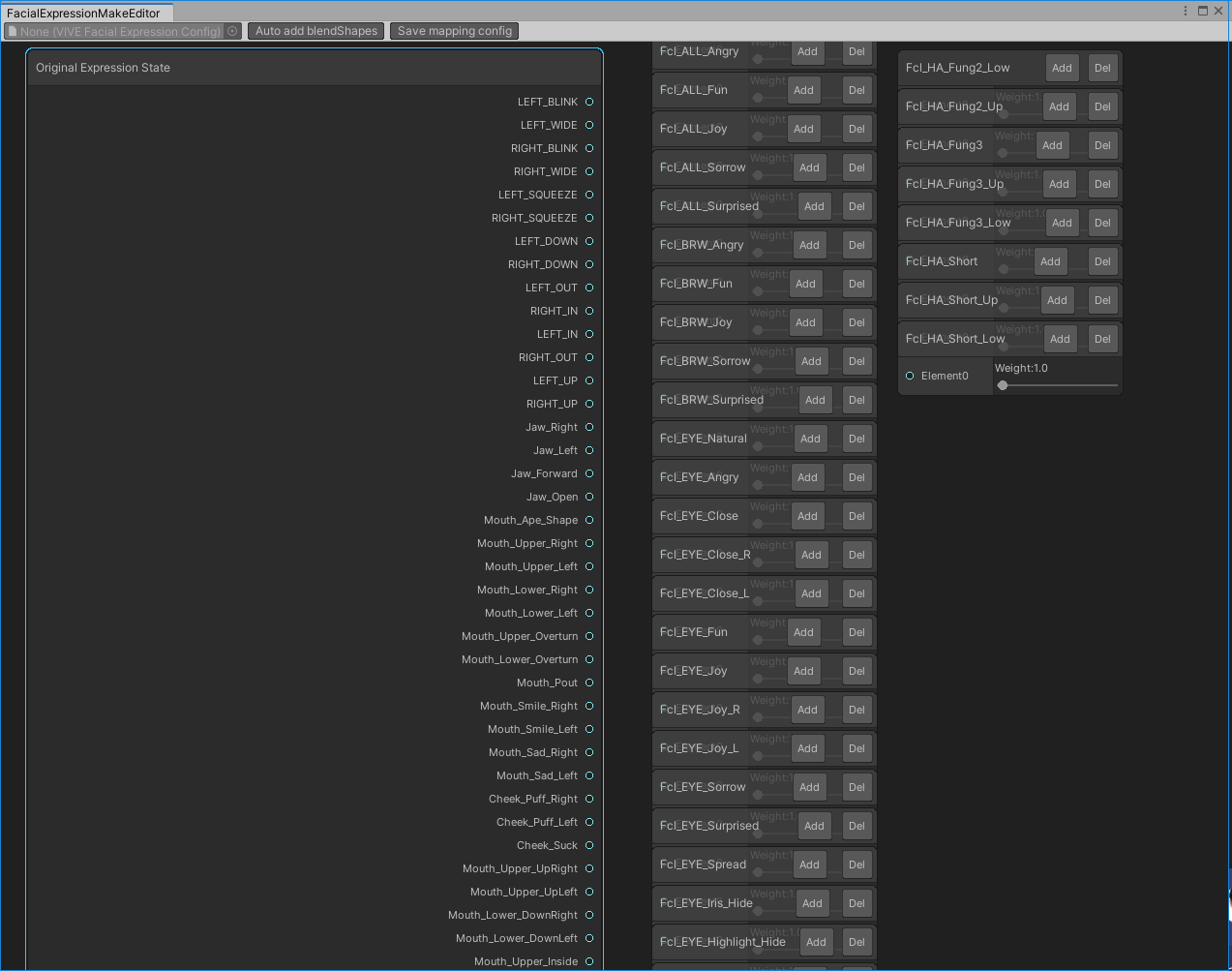

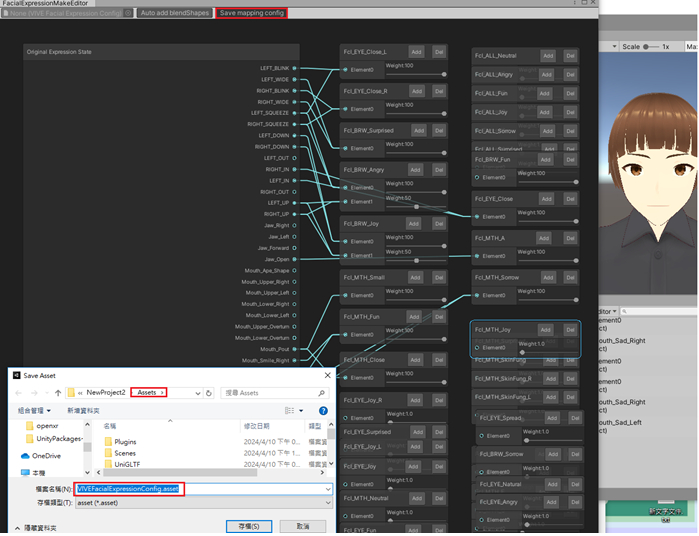

- Click Create/Open blendshape mapping config to map the model's blend shapes to Wave facial expressions. The FacialExpressionMakeEditor window opens.

- Create the FacialExpression config file and save it.

Note

Each port of a node created by “Auto add blendshapes” has a single, unique weight, which is applied to the Wave expression that port is mapped to. Duplicate port weights are not allowed.

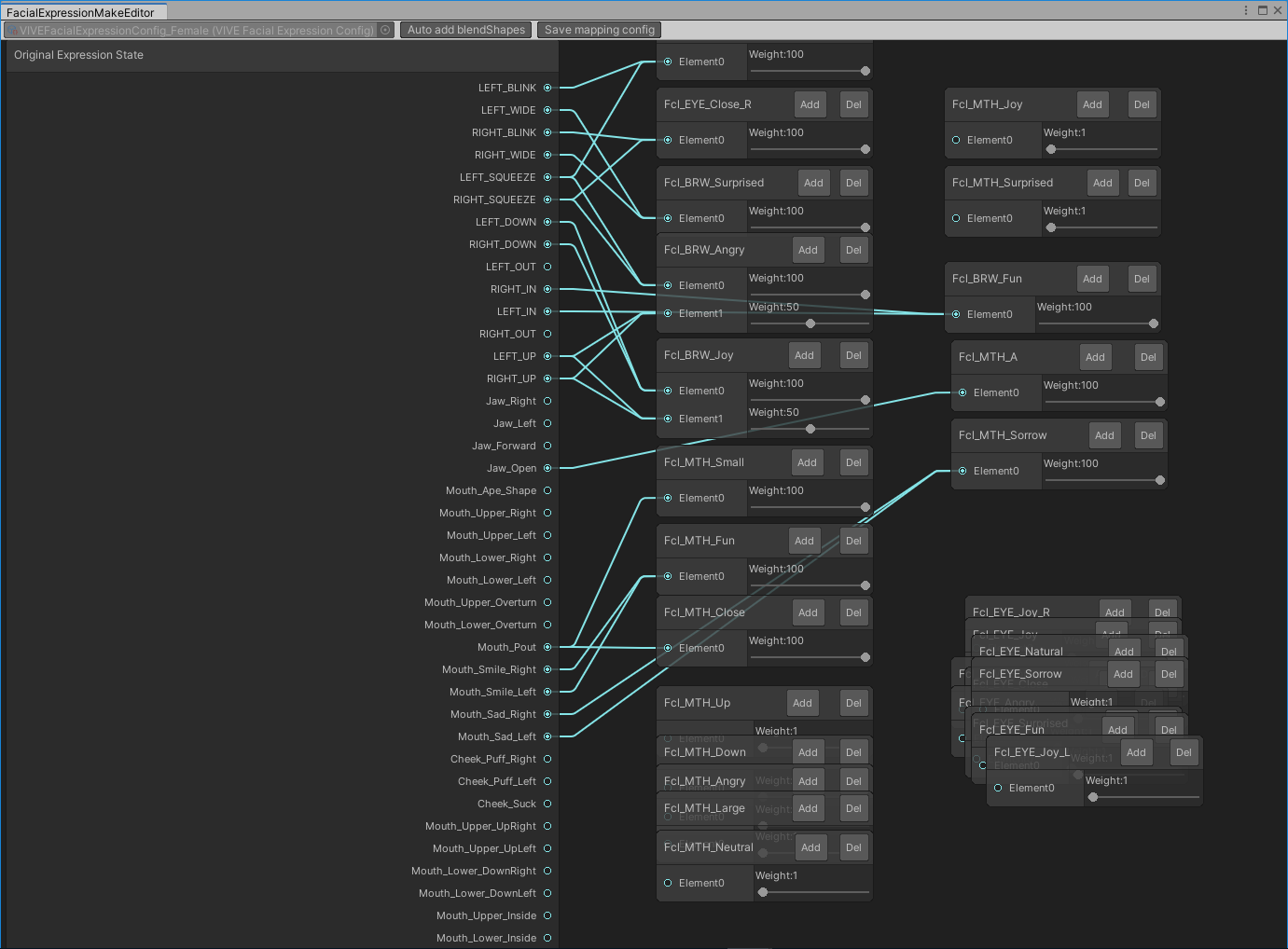

- Auto add blendshapes generates nodes for all blend shapes on the model's face joint. Connect the model's expression nodes to the Wave expressions and set the weight for each connection. While building the configuration, you can move blend shape nodes or delete redundant ones, and you can zoom the node graph view of FacialExpressionMakeEditor with the mouse. To give a blend shape node additional weights, click the Add button; to remove a weight, click the Del button. If you are unsure of a mapping, you can leave the model expression mapped to a Wave expression and set the weight to 0.

- Save mapping config opens a dialog box where you choose the file name and path of the configuration asset under the Assets folder. Make sure your joint information assets are configured correctly, because the configuration references them. If you are unsure, it is recommended to close the editor and create the configuration again. After saving, the asset is automatically assigned to VIVEFacialTrackingMakerAdapter.

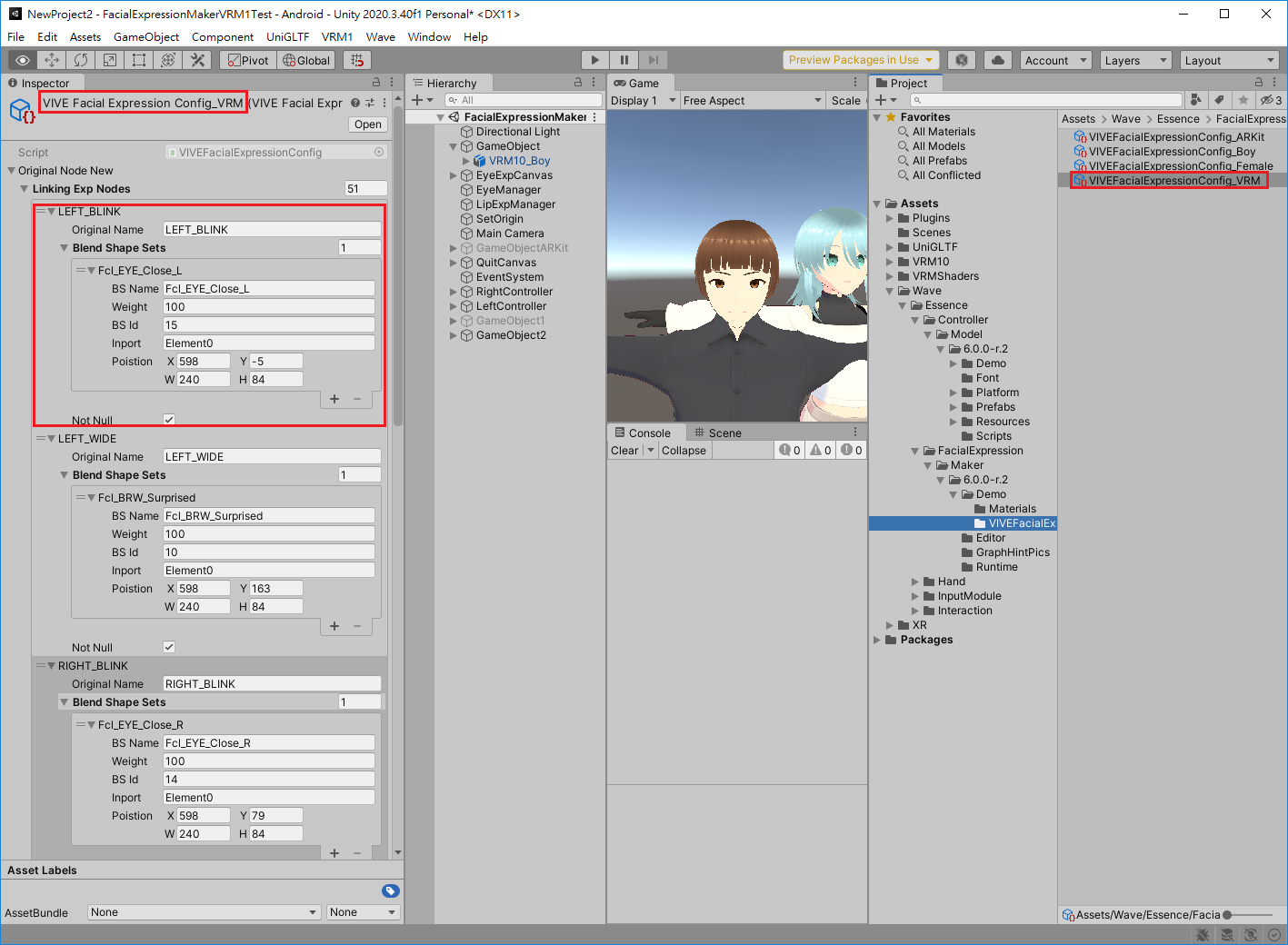

The image above shows a simple mapping configuration created with Auto add blendshapes.

Mapping Configuration File
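To make the role of the weights concrete, the sketch below models a mapping as plain data in Python. It is an illustration only, not the Wave SDK implementation: the `Port` class, the `blend_shape_value` function, and all expression names are hypothetical, and it assumes that a blend shape's value is the weighted sum of the Wave expression values it is connected to, with values normalized to the range 0 to 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """One connection from a blend shape node to a Wave expression (hypothetical)."""
    wave_expression: str
    weight: float


def blend_shape_value(ports, wave_values):
    """Weighted sum of the connected Wave expression values, clamped to [0, 1]."""
    total = sum(p.weight * wave_values.get(p.wave_expression, 0.0) for p in ports)
    return max(0.0, min(1.0, total))


# Hypothetical mapping: model blend shape name -> ports with weights.
mapping = {
    "A": [Port("JAW_OPEN", 1.0)],
    "Joy": [Port("MOUTH_SMILE_LEFT", 0.6), Port("MOUTH_SMILE_RIGHT", 0.4)],
    # A mapping you are unsure about can stay connected with weight 0.
    "Sorrow": [Port("CHEEK_PUFF", 0.0)],
}

# Hypothetical tracked values for one frame.
wave_values = {
    "JAW_OPEN": 0.8,
    "MOUTH_SMILE_LEFT": 0.6,
    "MOUTH_SMILE_RIGHT": 0.2,
    "CHEEK_PUFF": 1.0,
}

frame = {name: blend_shape_value(ports, wave_values) for name, ports in mapping.items()}
```

In this model, a port with weight 0 contributes nothing, so the connected Wave expression has no visible effect on the avatar until the weight is raised.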

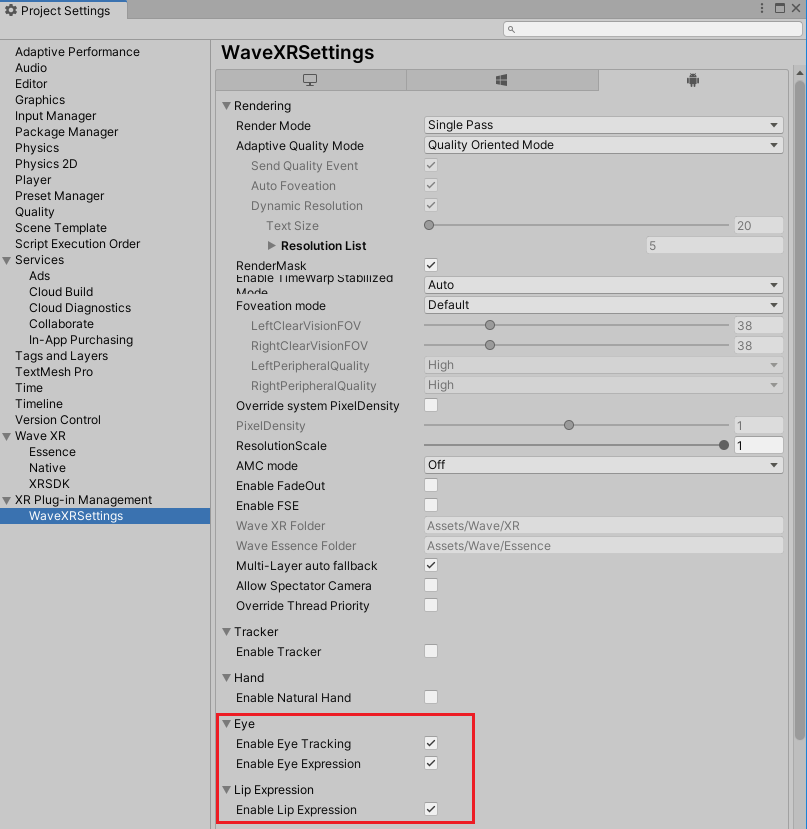

- Once you have mapped all the expressions you want, build the app to see the result. Before building, enable these features in the settings.